The Silent Assassin of Model Drift - Why Industrial Inspection Needs Anomaly Detection and MLOps

- Yu-Feng Wei

- Aug 27, 2025

Updated: Sep 8, 2025

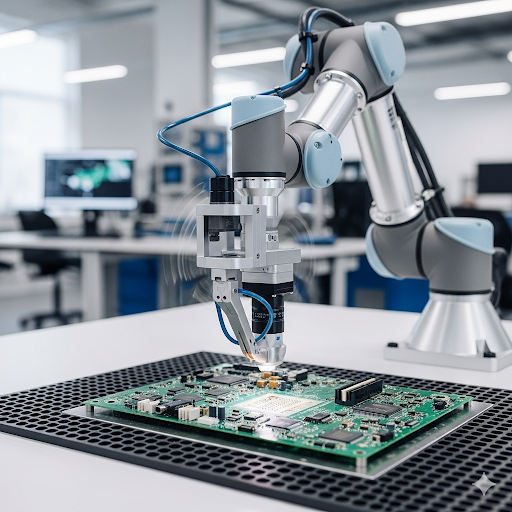

For industrial manufacturers, the promise of AI for quality control is undeniable. Reducing the demanding workload on human inspectors and improving efficiency are top priorities. However, a common misconception is that AI deployment can be a "set it and forget it" affair: a one-off software license and installation. In practice, it requires a continuing partnership with AI engineers who provide ongoing model recalibration and maintenance. This isn't merely a business strategy; it's a technical necessity driven by the inherent challenges of AI in dynamic industrial environments, particularly the phenomenon of model drift.

Key Takeaways

Model drift is the inevitable degradation of AI model performance due to changes in data distribution.

In industrial manufacturing, drift is caused by process variations, new defect types, and sensor changes.

Unaddressed drift leads to missed defects (false negatives), unnecessary re-inspections (false positives), and significant financial and reputational damage.

Anomaly detection models are superior to defect classification models for industrial inspection due to their ability to identify novel defects by learning what "normal" looks like.

Continuous model recalibration and maintenance are crucial to counteract drift, ensure sustained accuracy, and maximize the ROI of AI in industrial inspection.

The Inevitability of Model Drift in Industrial Inspection

Model drift occurs when the performance of a deployed machine learning model degrades over time because the data it sees in production no longer matches the distribution it was trained on. In semiconductor or PCB manufacturing, this isn't just a possibility; it's a certainty. Consider these scenarios:

Manufacturing Process Variations: Subtle shifts in machinery calibration, material suppliers, or environmental factors (temperature, humidity) can introduce minor, yet significant, changes to the appearance of PCBs, both good and defective.

New Defect Types: As technology evolves and production processes are refined, new types of defects might emerge that the original model was never trained to recognize.

Sensor Degradation/Calibration: Even the imaging equipment used for inspection can experience wear and tear, leading to slight alterations in image quality or lighting conditions.

Human Inspector Influence: When AI models are introduced to *replace* human inspectors, the feedback loop changes. Human inspectors naturally adapt to new defect patterns and provide implicit feedback. Without continuous calibration, the AI model lacks this adaptive capacity.

Any of these changes can cause your meticulously trained AI model to become outdated, leading to missed defects or an increase in false positives. The sections below use printed circuit board (PCB) manufacturing as the illustrative example.
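One way to make these scenarios measurable is to track a simple statistic of incoming images and compare it with the same statistic from the training period. The sketch below is illustrative only. It computes a Population Stability Index (PSI) over a hypothetical per-image feature (mean brightness) using synthetic numbers, not production data. The function name `psi`, the bin count, and the 0.1 and 0.25 cut-offs (a common rule of thumb, not a standard) are all assumptions.

```python
import math
import random


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one scalar feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0).
        return [max(c / len(values), eps) for c in counts]

    ref_frac = fractions(reference)
    cur_frac = fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


# Synthetic stand-ins for "mean image brightness" (hypothetical values).
random.seed(0)
reference = [random.gauss(120, 5) for _ in range(2000)]     # training period
same_process = [random.gauss(120, 5) for _ in range(2000)]  # unchanged line
drifted = [random.gauss(126, 5) for _ in range(2000)]       # e.g. lighting change

psi_same = psi(reference, same_process)
psi_drifted = psi(reference, drifted)

# Rule-of-thumb reading (assumption): < 0.1 stable, > 0.25 significant shift.
print(f"PSI, unchanged process: {psi_same:.3f}")
print(f"PSI, drifted process:   {psi_drifted:.3f}")
```

In a real line the monitored feature would more likely be an embedding or anomaly score than raw brightness, but the comparison of "then" against "now" works the same way.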

The Risks of Unaddressed Model Drift

The consequences of ignoring model drift in industrial inspection are significant and costly:

Increased False Negatives (Missed Defects): This is perhaps the most critical risk. An AI model experiencing drift might fail to identify genuine defects, leading to faulty PCBs reaching later stages of production or, worse, being shipped to customers. This results in costly rework, product recalls, and severe damage to brand reputation.

Increased False Positives (False Alarms): A drifting model might erroneously flag perfectly good PCBs as defective. This necessitates human re-inspection, negating the efficiency gains and reintroducing the workload AI was meant to alleviate. It can also lead to unnecessary scrap.

Reduced Operational Efficiency: Both false negatives and false positives disrupt the production flow, leading to bottlenecks, increased cycle times, and wasted resources.

Erosion of Trust in AI: If the AI system consistently underperforms due to drift, confidence in the technology will plummet, making future adoption of AI solutions difficult.

Financial Losses: Rework, scrap, warranty claims, and reputational damage all translate directly into significant financial losses.
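To see how these risks add up, it helps to put the two error types side by side. The sketch below is a back-of-the-envelope calculation, not measured data: the board counts, the cost per escaped defect, and the cost per re-inspection are hypothetical values chosen only to show the arithmetic, and the names `InspectionCounts` and `weekly_cost` are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class InspectionCounts:
    true_pos: int   # defective boards correctly flagged
    false_neg: int  # defective boards passed (missed defects)
    false_pos: int  # good boards flagged (false alarms)
    true_neg: int   # good boards correctly passed

    def miss_rate(self) -> float:
        defective = self.true_pos + self.false_neg
        return self.false_neg / defective if defective else 0.0

    def false_alarm_rate(self) -> float:
        good = self.false_pos + self.true_neg
        return self.false_pos / good if good else 0.0


def weekly_cost(counts, cost_per_escape, cost_per_reinspection):
    """Cost of missed defects plus cost of re-inspecting false alarms."""
    return (counts.false_neg * cost_per_escape
            + counts.false_pos * cost_per_reinspection)


# Hypothetical weekly counts for 10,000 boards, before and after drift.
at_deployment = InspectionCounts(true_pos=95, false_neg=5, false_pos=100, true_neg=9800)
after_drift = InspectionCounts(true_pos=80, false_neg=20, false_pos=400, true_neg=9500)

# Hypothetical costs in arbitrary currency units.
COST_PER_ESCAPE = 200
COST_PER_REINSPECTION = 2

for label, counts in [("at deployment", at_deployment), ("after drift", after_drift)]:
    print(label,
          f"miss rate={counts.miss_rate():.1%}",
          f"false alarm rate={counts.false_alarm_rate():.1%}",
          f"cost={weekly_cost(counts, COST_PER_ESCAPE, COST_PER_REINSPECTION)}")
```

With these assumed numbers, the weekly cost goes from 1,200 to 4,800 units, and most of the increase comes from the missed defects rather than the false alarms.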

Why Anomaly Detection Outperforms Defect Classification

Custom anomaly detection models, rather than traditional defect classification models, offer superior robustness against model drift.

Defect Classification Models: These models are trained on large datasets of known defect types. While effective for pre-defined issues, they struggle when encountering novel defects or subtle variations not present in their training data. Any new defect, or a significant change to an existing one, can cause them to fail spectacularly.

Anomaly Detection Models: In contrast, anomaly detection models are primarily trained on "good" or "normal" examples of PCBs. Their goal is to learn the intricate patterns that define a fault-free product. Anything that significantly deviates from these learned "normal" patterns is flagged as an anomaly.

This fundamental difference makes anomaly detection inherently more robust:

Adaptability to Novel Defects: An anomaly detection model doesn't need to have seen a specific defect type before to identify it as unusual. As long as a new defect causes a deviation from the learned normal state, it will be flagged. This is crucial in dynamic manufacturing environments where defect types can subtly evolve.

Focus on "Normal": By focusing on what is "good," the model is less susceptible to drift caused by minor variations in "good" parts, as long as these variations remain within the bounds of normalcy. Significant deviations, regardless of their specific type, are flagged.

Reduced Training Data Dependency: Training anomaly detection models often requires fewer labeled defect examples, as the primary training focuses on healthy samples, which are generally abundant.
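The idea of learning "normal" and flagging deviations can be shown with a deliberately small sketch. It is not a production inspection model: real systems typically work on learned image features, whereas this example assumes three hypothetical hand-picked measurements per board (pad area, trace width, mean brightness) and synthetic data. The class name `NormalOnlyDetector` and the 99.5% threshold quantile are assumptions.

```python
import random
import statistics


class NormalOnlyDetector:
    """Flags samples that deviate from per-feature statistics of good parts."""

    def fit(self, good_samples, quantile=0.995):
        columns = list(zip(*good_samples))
        self.means = [statistics.fmean(col) for col in columns]
        self.stds = [statistics.pstdev(col) or 1.0 for col in columns]
        # Threshold is a high quantile of the scores of the good samples,
        # so no defect examples are needed to set it.
        scores = sorted(self.score(s) for s in good_samples)
        self.threshold = scores[int(quantile * (len(scores) - 1))]
        return self

    def score(self, sample):
        # Largest absolute z-score across all features.
        return max(abs(x - m) / s
                   for x, m, s in zip(sample, self.means, self.stds))

    def is_anomaly(self, sample):
        return self.score(sample) > self.threshold


# Synthetic "good" boards: [pad area, trace width, mean brightness] (hypothetical).
random.seed(1)
good_boards = [
    [random.gauss(1.0, 0.02), random.gauss(0.15, 0.005), random.gauss(120, 3)]
    for _ in range(1000)
]
detector = NormalOnlyDetector().fit(good_boards)

typical_board = [1.0, 0.15, 120.0]
# A defect the detector never saw during training: a badly thinned trace.
novel_defect = [1.0, 0.05, 120.0]

print("typical board flagged:", detector.is_anomaly(typical_board))
print("novel defect flagged: ", detector.is_anomaly(novel_defect))
```

The detector never receives a labeled defect. The thinned trace is flagged only because it falls far outside the range observed on good boards, which is the property that makes this family of models tolerant of new defect types.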

The Necessity of MLOps with Continuous Recalibration and Maintenance

Even anomaly detection models are not entirely immune to drift. Over time, the definition of "normal" can subtly shift due to the aforementioned process variations. This is why MLOps support, including continuous model recalibration and maintenance service, is indispensable:

Proactive Monitoring: Continuously monitor model performance and watch for early signs of degradation.

Regular Retraining: As process variations occur or new "normal" patterns emerge, periodically retrain and fine-tune your models with fresh data, ensuring they always reflect the current state of your production.

Adaptation to New Information: Analyze flagged anomalies and new production data to refine the model so that it remains accurate and effective.

Expert Oversight: A dedicated team provides the human judgment needed to interpret model performance, diagnose issues, and implement adjustments that an automated system alone cannot make.
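The monitoring step in this loop can be as simple as watching how often the model flags boards. The following is a minimal sketch under stated assumptions: the class `FlagRateMonitor`, the window size, and the "three times the baseline rate" trigger are hypothetical choices, and the input streams are synthetic. In practice the alert would hand off to engineers for review rather than retrain anything automatically.

```python
from collections import deque


class FlagRateMonitor:
    """Tracks the recent anomaly-flag rate and signals when it departs from baseline."""

    def __init__(self, baseline_rate, window=500, tolerance=3.0):
        self.baseline_rate = baseline_rate  # flag rate measured at deployment
        self.tolerance = tolerance          # allowed multiple of the baseline
        self.flags = deque(maxlen=window)   # most recent inspection outcomes

    def update(self, flagged: bool) -> bool:
        """Record one inspection; return True when recalibration looks due."""
        self.flags.append(flagged)
        if len(self.flags) < self.flags.maxlen:
            return False  # not enough evidence yet
        rate = sum(self.flags) / len(self.flags)
        return rate > self.tolerance * self.baseline_rate


monitor = FlagRateMonitor(baseline_rate=0.01, window=200)

# Stable production: 1 board in 100 is flagged, matching the baseline.
stable_alerts = [monitor.update(i % 100 == 0) for i in range(1000)]

# Drifted production: 1 board in 10 is flagged, e.g. after a material change.
drift_alerts = [monitor.update(i % 10 == 0) for i in range(400)]

print("alert during stable period:", any(stable_alerts))
print("alert during drifted period:", any(drift_alerts))
```

A rising flag rate does not say whether defects genuinely increased or "normal" has shifted. Resolving that question is the job of the expert review and retraining steps.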

In the complex world of industrial manufacturing, treating AI as a one-off software license is akin to buying a precision machine and never performing maintenance. It might work for a short while, but eventually, performance will degrade. To truly leverage AI for sustainable improvement in inspection, a continuous, adaptive partnership is not just beneficial—it's essential.
