The Evolution of Synthetic Data Generation: From "Mock Data" to AI Intelligence

- Ting-Yuan Wang

- Aug 7, 2025

Updated: Sep 8, 2025

Key Takeaways

Synthetic data generation (SDG) has evolved from a niche research topic into an essential technology for AI development. The journey from statistical methods to AI-driven generation has made SDG more powerful, accessible, and valuable than ever before.

The bottom line: Organizations that embrace synthetic data stand to gain a significant advantage in AI development, because it can help them build better models faster, at lower cost, and with fewer privacy concerns.

However, the key to success isn't just adopting SDG; it's understanding when and how to use it strategically. In our next article, we'll explore how to choose the right scale and tasks for SDG implementation, helping you decide when synthetic data is the right solution for your AI projects.

As AI continues to advance, synthetic data will become even more critical. The organizations that master SDG strategy today will be the AI leaders of tomorrow.

Introduction: The Data Hunger Problem

AI systems are perpetually hungry for data. Whether it's training computer vision models to detect defects, developing autonomous vehicles, or building medical diagnostic tools, the demand for high-quality training data far exceeds our ability to collect it manually.

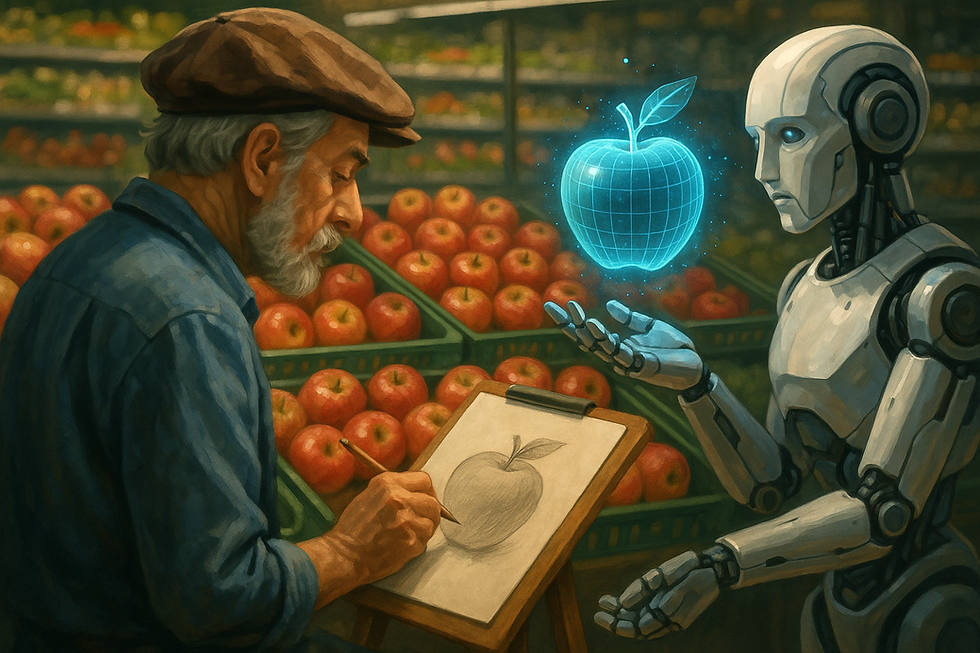

This is where synthetic data generation (SDG) comes in. What began with statistical techniques for protecting privacy, and later with researchers borrowing video game graphics to train AI models, has evolved into a sophisticated technology that is reshaping how we approach machine learning. Now driven largely by generative AI, SDG has become a cornerstone of AI development.

The Three Generations: A Journey of Innovation

First Generation: The Privacy Revolution (1993-2010)

The story begins with Donald Rubin's 1993 breakthrough in statistical privacy protection: applying his multiple imputation framework to release fully synthetic datasets instead of real records. It addressed a critical question: how can analysts perform meaningful analysis while individual privacy stays protected?

What it achieved:

Preserved statistical relationships while greatly reducing the risk of identifying individuals

Enabled research on sensitive data with far lower privacy risk

Provided a solid foundation for data protection

The limitation: It worked well for structured data like spreadsheets, but couldn't handle complex visual or audio data.
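
To make the idea concrete, here is a minimal Python sketch of multiple-imputation-style synthesis for a single numeric column. It assumes a normal model with a noninformative prior, and the names `synthesize_normal` and `combined_mean` are hypothetical. Rubin's proposal applies the same principle with richer imputation models across whole datasets, and valid variance estimates from fully synthetic data need dedicated combining rules that are omitted here.

```python
import random
import statistics


def synthesize_normal(column, m=5, seed=0):
    """Return m fully synthetic copies of one numeric column (hypothetical helper).

    Simplified sketch: assumes the column is roughly normal and that each
    release has the same size as the original. Each copy draws its own mean
    and variance from the posterior, then samples new values, so parameter
    uncertainty is carried into the releases and no value is copied from a real record.
    """
    if len(column) < 2:
        raise ValueError("need at least two observations")
    rng = random.Random(seed)
    n = len(column)
    ybar = statistics.fmean(column)
    s2 = statistics.variance(column)  # sample variance (n - 1 denominator)
    releases = []
    for _ in range(m):
        # sigma^2 | data ~ (n - 1) * s^2 / chi^2_{n-1}; chi^2_k is Gamma(k/2, scale 2)
        chi2 = rng.gammavariate((n - 1) / 2, 2.0)
        sigma2 = (n - 1) * s2 / chi2
        # mu | sigma^2, data ~ Normal(ybar, sigma^2 / n)
        mu = rng.gauss(ybar, (sigma2 / n) ** 0.5)
        # Posterior predictive draws form one synthetic release.
        releases.append([rng.gauss(mu, sigma2 ** 0.5) for _ in range(n)])
    return releases


def combined_mean(releases):
    """Point estimate: average the per-release estimates of the mean."""
    return statistics.fmean(statistics.fmean(r) for r in releases)


incomes = [42.0, 55.5, 38.2, 61.0, 47.3, 52.8, 44.1, 58.6]  # made-up values
releases = synthesize_normal(incomes, m=5)
print(round(combined_mean(releases), 1))
```

Analysts work only with the released copies; averaging estimates across the m releases is what lets them recover population-level statistics without touching the confidential values.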

Second Generation: The Gaming Engine Era (2010-2017)

As AI advanced, researchers turned to 3D rendering and game engines like Unity and Unreal. The concept was simple: if we can create virtual worlds, why not use them to train AI?

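The breakthrough: Instead of trying to make simulations perfectly realistic, researchers discovered that randomizing environmental parameters actually improved how well models performed in real-world applications. With enough variation during training, the real world looks to the model like just another variation.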

Key innovations:

Domain Randomization: Randomizing lighting, textures, and object positions during training

Domain Adaptation: Using learned models to narrow the "sim-to-real" gap between synthetic and real data

The challenge: While effective, this approach required significant engineering expertise and was expensive to implement.
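
Domain randomization amounts to sampling a fresh scene configuration for every rendered image. The Python sketch below shows only that sampling step; the parameter names, ranges, and texture count are hypothetical, and the rendering itself (in Unity, Unreal, or another engine) is not shown.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    light_intensity: float   # arbitrary units
    light_azimuth_deg: float
    texture_id: int          # index into a (hypothetical) texture library
    object_xy: tuple         # object position on a table, in metres
    camera_height_m: float


# Hypothetical ranges; in practice these are tuned per task.
RANGES = {
    "light_intensity": (0.2, 2.0),
    "light_azimuth_deg": (0.0, 360.0),
    "camera_height_m": (0.5, 1.5),
    "table_half_width_m": 0.4,
}
NUM_TEXTURES = 50


def sample_scene(rng):
    """Draw one randomized scene configuration."""
    half = RANGES["table_half_width_m"]
    return SceneParams(
        light_intensity=rng.uniform(*RANGES["light_intensity"]),
        light_azimuth_deg=rng.uniform(*RANGES["light_azimuth_deg"]),
        texture_id=rng.randrange(NUM_TEXTURES),
        object_xy=(rng.uniform(-half, half), rng.uniform(-half, half)),
        camera_height_m=rng.uniform(*RANGES["camera_height_m"]),
    )


def randomized_batch(n, seed=0):
    """One random configuration per image; each would be handed to a renderer."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


for params in randomized_batch(3):
    print(params)
```

Widening the ranges increases variety at the cost of less realistic individual images, which is exactly the trade-off domain randomization exploits.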

Third Generation: AI Creates AI (2014-Present)

The game-changer came with Generative Adversarial Networks (GANs) in 2014, followed by Diffusion Models and Large Language Models. These technologies enabled AI to generate its own training data.

What changed:

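GANs: Two neural networks compete, a generator that creates synthetic ("mock") data and a discriminator that tries to tell it apart from real data

Diffusion Models: More stable training than GANs, generating data by learning to remove noise step by step

Large Language Models: Fluent text generation that is often hard to distinguish from human writing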
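
The "gradual noise removal" in diffusion models is learned by reversing a fixed forward process that gradually adds Gaussian noise to real data. The Python sketch below implements only that forward process, assuming the linear variance schedule (1e-4 to 0.02 over 1000 steps) used in the DDPM paper by Ho et al. (2020); the trained denoising network, which does the actual generation, is omitted.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly over T steps."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def alpha_bars(betas):
    """Cumulative signal fraction: alpha_bar_t = prod over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def noisy_sample(x0, t, abar, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    a = abar[t]
    return [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for v in x0]


abar = alpha_bars(linear_beta_schedule())
rng = random.Random(0)
x0 = [1.0, -0.5, 0.25]                   # toy "data point"
print(noisy_sample(x0, 10, abar, rng))   # still close to x0
print(noisy_sample(x0, 999, abar, rng))  # essentially pure noise
```

A denoiser is trained to predict the added noise at each step; generation then starts from pure noise and applies the learned reverse steps one at a time.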

The advantage: These methods can generate high-quality data without hand-built simulation pipelines, making SDG accessible to more organizations.

Why Synthetic Data Matters: The Business Case

Solving the Data Scarcity Crisis

The problem: Many AI applications require data that's either too expensive, too rare, or too sensitive to collect manually.

The solution: SDG can generate training data at a scale limited mainly by compute, making AI development feasible in scenarios where it was previously impractical.

Real-world impact:

Medical imaging: Generating rare disease cases for training

Autonomous vehicles: Creating accident scenarios for safety testing

Manufacturing: Producing defect images for quality control
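
For tabular data, one long-established way to generate additional rare cases is SMOTE (Synthetic Minority Over-sampling Technique), swapped in here as an illustration rather than a method the article prescribes. The minimal Python sketch below interpolates between a rare-class sample and one of its nearest rare-class neighbours; the function name and toy data are hypothetical, and image-based applications such as medical imaging would typically rely on generative models instead.

```python
import math
import random


def smote(minority, n_new, k=3, seed=0):
    """Create n_new synthetic minority samples by interpolating between a
    random minority sample and one of its k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # k nearest neighbours of x among the other minority samples
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(x, p))[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from x to nb, in [0, 1)
        synthetic.append([xi + gap * (ni - xi) for xi, ni in zip(x, nb)])
    return synthetic


# Made-up two-feature measurements for a rare class.
rare_cases = [[1.0, 2.0], [1.2, 1.9], [0.9, 2.2], [1.1, 2.1]]
for row in smote(rare_cases, n_new=3):
    print(row)
```

Because every new point lies on a segment between two real rare cases, SMOTE fills in the region the rare class already occupies rather than inventing entirely new patterns.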

Reducing Costs and Accelerating Development

Traditional approach: Manual data collection and labeling can cost millions and take months.

SDG approach: Automated generation with annotations produced alongside the data (exact by construction for rendered scenes), potentially reducing costs by 60-80% and accelerating development by 3-5x.
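
The annotation savings rest on a simple point: when the generator places an object itself, the label is a by-product of generation. The toy Python sketch below illustrates this for defect detection; the image size, pixel values, and rectangular "defect" are hypothetical stand-ins for a real rendering or generative pipeline.

```python
import random


def synth_defect_image(width=32, height=32, seed=0):
    """Draw a toy grayscale 'part' with one rectangular defect (hypothetical helper).

    Because the code places the defect itself, its bounding box is known
    exactly, so the label needs no human annotator.
    """
    rng = random.Random(seed)
    # Background: uniform surface with mild pixel noise (values 100-120).
    img = [[rng.randint(100, 120) for _ in range(width)] for _ in range(height)]
    dw, dh = rng.randint(3, 8), rng.randint(3, 8)  # defect width and height
    x0, y0 = rng.randint(0, width - dw), rng.randint(0, height - dh)
    for y in range(y0, y0 + dh):
        for x in range(x0, x0 + dw):
            img[y][x] = 10  # dark defect pixels
    # Bounding box as (x_min, y_min, x_max, y_max), max values exclusive.
    bbox = (x0, y0, x0 + dw, y0 + dh)
    return img, bbox


dataset = [synth_defect_image(seed=s) for s in range(100)]
image, box = dataset[0]
print(box)
```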

Protecting Privacy and Compliance

The challenge: Many industries face strict privacy regulations that limit data sharing.

The solution: SDG generates data that preserves statistical properties without containing real individuals' records, enabling collaboration while supporting compliance.
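
A common, though informal, sanity check on that claim is the distance to closest record (DCR): a synthetic row that sits almost on top of a real row may be a memorized copy. The Python sketch below assumes numeric features already scaled to comparable ranges; the function names and threshold are hypothetical, and formal guarantees require techniques such as differential privacy.

```python
import math


def distance_to_closest_record(synthetic, real):
    """For each synthetic row, the Euclidean distance to its nearest real row."""
    return [min(math.dist(s, r) for r in real) for s in synthetic]


def flag_near_copies(synthetic, real, threshold):
    """Indices of synthetic rows closer than `threshold` to some real row.

    A heuristic screen only: passing it does not prove the data is private.
    """
    dcr = distance_to_closest_record(synthetic, real)
    return [i for i, d in enumerate(dcr) if d < threshold]


real = [[0.1, 0.5], [0.8, 0.3], [0.4, 0.9]]         # made-up, pre-scaled features
synthetic = [[0.1, 0.5], [0.6, 0.6], [0.35, 0.85]]
print(flag_near_copies(synthetic, real, threshold=0.05))  # prints [0]
```

Here the first synthetic row is an exact copy of a real one and gets flagged, while the third, about 0.07 away from its nearest real row, passes this particular threshold.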

The Future: Making SDG Work for Your Business

The Next Challenge: Strategic Implementation

While SDG technology has matured significantly, the next frontier is not just having the technology but knowing when and how to use it effectively. Not every AI project benefits equally from synthetic data, and the success of SDG implementation depends heavily on understanding the relationship between model scale, task requirements, and data characteristics.

Key Questions Organizations Must Answer

Model Scale Matters:

Small models often need highly targeted, specific synthetic data

Large models require diverse, scalable data generation

The choice of SDG approach should match your model's complexity

Task-Specific Considerations:

Visual classification vs. object detection vs. segmentation

Quantitative vs. reasoning-based tasks

Each task type has different SDG requirements and success patterns

Strategic Decision Framework:

When to build your own SDG pipeline vs. buy synthetic data

Cost-benefit analysis: data generation vs. data collection vs. data purchase

Risk assessment for different SDG approaches
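
The cost-benefit question can be framed as a simple break-even calculation: a fixed setup cost plus a marginal cost per sample, subject to a deadline. The Python sketch below uses entirely hypothetical figures and names and ignores quality differences between options; it only shows how the cheapest feasible option can change with the amount of data needed.

```python
from dataclasses import dataclass


@dataclass
class DataOption:
    name: str
    fixed_cost: float        # one-off setup (pipeline, contract, tooling)
    cost_per_sample: float   # marginal cost per labelled sample
    weeks_to_deliver: float


def total_cost(option, n_samples):
    return option.fixed_cost + option.cost_per_sample * n_samples


def cheapest(options, n_samples, max_weeks):
    """Cheapest option that meets the deadline, or None if none does."""
    feasible = [o for o in options if o.weeks_to_deliver <= max_weeks]
    return min(feasible, key=lambda o: total_cost(o, n_samples), default=None)


# Purely hypothetical figures, for illustration only.
options = [
    DataOption("collect and label", fixed_cost=20_000, cost_per_sample=2.00, weeks_to_deliver=16),
    DataOption("generate (SDG)", fixed_cost=80_000, cost_per_sample=0.05, weeks_to_deliver=6),
    DataOption("purchase", fixed_cost=5_000, cost_per_sample=0.50, weeks_to_deliver=2),
]
for n in (10_000, 500_000):
    best = cheapest(options, n, max_weeks=12)
    print(n, best.name if best else "no feasible option")
```

With these made-up numbers, buying wins for small volumes while generation wins once its high setup cost is spread over enough samples; real decisions would also weigh data quality and risk.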

The Road Ahead

The future of SDG isn't just about better technology; it's about smarter implementation. Organizations that understand how to match SDG strategies with their specific AI needs will gain significant competitive advantages.

As we move forward, the focus will shift from "Can we generate synthetic data?" to "Should we generate synthetic data for this specific use case?" This strategic approach will be crucial for maximizing ROI and ensuring successful AI deployments.